Networking’s Pivotal Role in AI Workloads

Artificial intelligence training and inference require enormous data exchanges among servers, graphics processing units, and high-performance storage arrays. Network bottlenecks can slow those operations dramatically, turning low-latency switching and high-throughput routing into mission-critical components of any modern AI cluster. Arista designs Ethernet switches and software platforms that move data quickly, reliably and, according to the firm, securely across clusters that may contain tens of thousands of interconnected devices.

Industry researchers forecast that spending on AI infrastructure will expand at a compound annual growth rate above 20 percent through the end of the decade, with networking representing one of the fastest-growing subsegments. A recent International Data Corporation report highlighted that enterprises are shifting budgets toward lower-latency fabrics capable of handling the surge in model size and training complexity. Arista’s executives have repeatedly emphasized that demand for 400- and 800-gigabit Ethernet switching is outpacing earlier product cycles, mirroring the rapid adoption of GPU accelerators across cloud data centers.

Open Ecosystem Strategy

Rather than aligning exclusively with a single chip or server manufacturer, Arista promotes what it calls an open networking ecosystem. The company collaborates with silicon vendors, AI software developers and storage suppliers to certify performance across multiple hardware configurations. Management contends that the breadth of these partnerships reduces customer lock-in, simplifies procurement, and ensures that new features can be deployed rapidly as AI requirements evolve.

Key to that strategy is the firm’s EtherLink portfolio, a suite of platforms that unify network automation, telemetry, security and traffic management under one control plane. EtherLink fabrics are already deployed in several large-scale AI systems, including hyperscale clusters used for natural-language processing, computer vision and recommendation engines. By integrating orchestration and monitoring tools natively, Arista aims to minimize manual intervention, lower operational expenses and deliver consistent performance even at multi-terabit data rates.

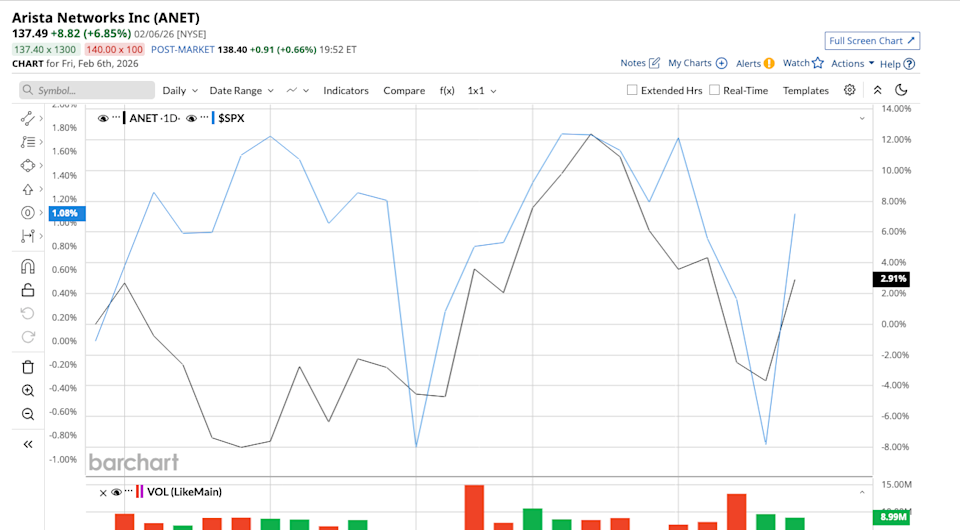

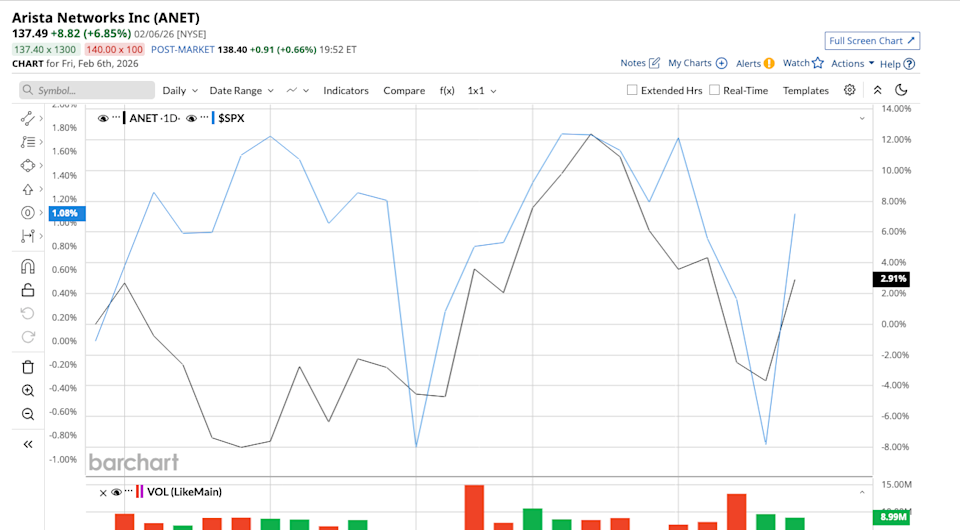

Premium Valuation Under Scrutiny

The company’s stock has outperformed broader market indices over the past year, buoyed by optimism that the surge in AI spending will translate into sustained revenue growth. That performance has pushed Arista’s valuation multiples well above historical averages for the networking sector, drawing attention to whether near-term results can justify the premium. Investors will parse the Feb. 12 earnings release for updated guidance on 2026 revenue, margin trends, and capital expenditures that could signal the durability of AI-related demand.

Analysts are also focused on supply-chain dynamics, given ongoing tightness in certain high-speed optical components and merchant silicon. Any commentary about lead-time improvements or potential constraints could influence expectations for the first half of 2026. Additionally, progress in expanding sales outside North America will be monitored, as management has repeatedly expressed interest in capturing incremental share in Europe and Asia, where cloud providers are accelerating the build-out of local AI capacity.

Deferred Revenue Points to Continued Adoption

The jump to $4.7 billion in deferred revenue during the third quarter indicates that customers are reserving capacity ahead of formal rollout schedules. Such preorders often precede large-scale installations, particularly in scenarios where data-center operators need to synchronize networking upgrades with the arrival of new compute accelerators. For Arista, the backlog may provide a buffer against short-term macroeconomic fluctuations, but it also raises expectations that the company will convert those orders into realized revenue without significant delays.

While market attention frequently gravitates toward semiconductor designers and AI model developers, networking remains a foundational layer that enables efficient scaling. Arista’s fourth-quarter results will therefore serve as a litmus test not only for the company but also for the broader thesis that data-center networking is a primary beneficiary of AI adoption. A strong showing could reinforce confidence in continued capital investment across the sector; a shortfall might prompt questions about the pace at which organizations are translating AI ambitions into tangible infrastructure orders.

Management’s conference call following the earnings release is expected to address the competitive landscape, including pressure from incumbent switch vendors and emerging alternatives leveraging proprietary interconnect technologies. Insight into product road maps, particularly for 1.6-terabit Ethernet, could shape sentiment on Arista’s ability to maintain technology leadership as AI models grow even larger and more bandwidth-intensive.

Image credit: FOTOGRIN via Shutterstock